In this post, we will explore how Personio has provided AWS support to the Security Knowledge Framework — one of OWASP’s core projects — to deploy it as an internal service and bring Development and Security teams closer together.

Why Security Knowledge Framework?

At Personio, we continuously review and enhance our processes, and our Security team is no exception. The Security Awareness initiative aims to ensure that all of our employees, and especially our engineers, understand and use key security concepts. This allows Personio to provide not only a great product, but also a secure one.

As part of our ongoing improvements, we considered several options to provide a hands-on training service where engineers could continuously practice their security skills and further understand the security concepts and vulnerabilities explained across our security training sessions. More specifically, we needed a solution for helping them understand how their actions affect our product, and how they can appropriately apply security countermeasures as needed.

After proper research and consideration of different options on the market, we deemed that the Security Knowledge Framework, the open-source project from OWASP, would be the tool that most suited our use case and needs. As an added bonus, integrating this framework also provided an opportunity for the Security team to align more closely with our Engineering counterparts while helping identify Security Champions across the organization.

Security Knowledge Framework on AWS

Hybrid Clustering

Although SKF was the solution that best suited our needs, we quickly realized it did not support our cloud provider, Amazon Web Services.

To avoid accumulating tech debt by deviating from our current infrastructure, and following our #SolutionsOverProblems company operating principle, we deemed it worthwhile to invest some time in making SKF work on top of AWS Elastic Kubernetes Service (EKS) clusters. Another argument in favor of this investment was the integration of EKS with AWS Fargate, a feature that allows EKS to run serverless Kubernetes Pods, avoiding the hassle of provisioning, managing, and scaling Kubernetes Nodes.

To understand our approach to this enhancement, it is crucial to first understand how one of SKF’s core features, the Labs, works.

Security Knowledge Framework is deployed via Kubernetes, an open-source container orchestration system for automating software deployment, scaling, and management of containerized applications. It deploys all its resources as Docker containers within a Kubernetes cluster: the web frontend, the backend API service and RabbitMQ worker, a RabbitMQ server, the database, et cetera.

And, of course, as the Labs feature deploys vulnerable machines on demand, for security purposes you want to keep them in a separate cluster, isolated from the rest of the SKF resources.

Once you have your main cluster deployed, when starting a practice lab, the backend will call the Kubernetes API of your Labs cluster in order to create the Pods, Ingresses, and all other resources necessary to have a sandboxed environment to practice in.
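To make the lab workflow more concrete, here is a minimal Python sketch of the kind of manifests a backend could submit to the Labs cluster for a single lab. It is not SKF’s actual code: the function name, the `skf-labs` namespace, the `nginx` ingress class, and the image and host values are all assumptions chosen for illustration.

```python
from typing import Any, Dict, List


def build_lab_manifests(lab_name: str, image: str, port: int, host: str,
                        namespace: str = "skf-labs") -> List[Dict[str, Any]]:
    """Return Pod, Service, and Ingress manifests for one sandboxed lab.

    All names and defaults here are hypothetical; SKF's real backend may
    structure its resources differently.
    """
    labels = {"app": lab_name}
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": lab_name, "namespace": namespace, "labels": labels},
        "spec": {"containers": [{
            "name": lab_name,
            "image": image,
            "ports": [{"containerPort": port}],
        }]},
    }
    # The Service gives the Ingress a stable target in front of the Pod.
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": lab_name, "namespace": namespace},
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }
    # The Ingress exposes the lab under its own hostname.
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": lab_name, "namespace": namespace},
        "spec": {
            "ingressClassName": "nginx",
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": lab_name,
                                            "port": {"number": 80}}},
                }]},
            }],
        },
    }
    return [pod, service, ingress]
```

A backend would then send each of these objects to the Labs cluster’s Kubernetes API and delete them again once the lab is stopped.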

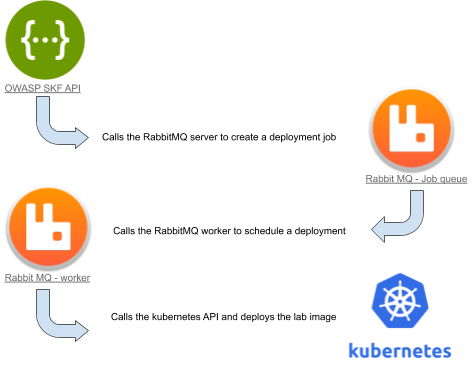

Under the hood, it works like this:

Now that we understand how SKF works, we can quickly see that AWS provides us with a great way to deploy this tool efficiently: AWS Fargate.

One of the main perks of supporting AWS is Fargate, an AWS feature integrated with Amazon’s EKS that allows the cluster to run serverless pods. These pods are automatically provided with right-sized compute capacity to run any application. Since SKF provides more than 150 labs with very different needs in terms of computational resources, Fargate is a very good fit for making sure SKF scales to different kinds of practice laboratories while keeping costs down.

However, Fargate has several limitations:

- There is a maximum of 4 vCPU and 30 GB of memory per pod.

- Currently, there is no support for stateful workloads that require persistent volumes or file systems.

- You cannot run DaemonSets, privileged pods, or pods that use HostNetwork or HostPort.

- The only load balancer you can use is an Application Load Balancer.
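The limitations above can be turned into a quick pre-flight check. The following Python sketch inspects a pod spec (as a plain dictionary) and reports which of the listed restrictions it would hit. It is an illustration rather than an official AWS tool: the function names are hypothetical, only `m`, `Mi`, and `Gi` quantity suffixes are parsed, `Gi` is treated as GB for simplicity, and resource requests are simply summed across containers.

```python
from typing import Any, Dict, List

MAX_VCPU = 4.0        # per-pod ceiling from the list above
MAX_MEMORY_GB = 30.0  # per-pod ceiling from the list above


def cpu_to_vcpu(quantity: str) -> float:
    """Convert a Kubernetes CPU quantity such as '500m' or '2' to vCPUs."""
    if quantity.endswith("m"):
        return float(quantity[:-1]) / 1000
    return float(quantity)


def memory_to_gb(quantity: str) -> float:
    """Convert an 'Mi' or 'Gi' memory quantity to gigabytes (simplified)."""
    if quantity.endswith("Gi"):
        return float(quantity[:-2])
    if quantity.endswith("Mi"):
        return float(quantity[:-2]) / 1024
    raise ValueError(f"unsupported memory quantity: {quantity}")


def fargate_blockers(kind: str, pod_spec: Dict[str, Any]) -> List[str]:
    """List the reasons a workload could not run on Fargate (empty if none)."""
    blockers: List[str] = []
    if kind == "DaemonSet":
        blockers.append("DaemonSets are not supported")
    if pod_spec.get("hostNetwork"):
        blockers.append("hostNetwork is not supported")
    if any("persistentVolumeClaim" in v for v in pod_spec.get("volumes", [])):
        blockers.append("persistent volumes are not supported")

    total_cpu = 0.0
    total_memory = 0.0
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        if container.get("securityContext", {}).get("privileged"):
            blockers.append(f"container {name} is privileged")
        if any("hostPort" in p for p in container.get("ports", [])):
            blockers.append(f"container {name} uses hostPort")
        requests = container.get("resources", {}).get("requests", {})
        total_cpu += cpu_to_vcpu(requests.get("cpu", "0"))
        total_memory += memory_to_gb(requests.get("memory", "0Mi"))

    if total_cpu > MAX_VCPU:
        blockers.append(f"requests {total_cpu} vCPU, above the {MAX_VCPU} limit")
    if total_memory > MAX_MEMORY_GB:
        blockers.append(f"requests {total_memory} GB, above the {MAX_MEMORY_GB} limit")
    return blockers
```

Any workload for which this returns a non-empty list is a candidate for regular EC2 nodes instead of Fargate.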

As SKF is deployed with Nginx Ingress Controller to manage the ingresses that the application and each practice laboratory will need, this becomes a problem because Nginx Ingress Controller needs a privileged pod to run properly.

In order to work around this within AWS, we set up a Hybrid cluster with both EC2 and Fargate compute resources. This allows us to run the Nginx Ingress Controller within a small EC2 Managed Node, while keeping the advantage of running all our other workloads on Fargate nodes.

SKF also makes use of a Persistent Volume Claim (PVC) for its MySQL service. With our new approach, we have to take into account that Fargate won’t be able to connect to our PVC unless we configure an EFS CSI driver. We can therefore either configure that driver, or deploy the MySQL service in a different namespace that doesn’t match our Fargate profile, so that it runs on our EC2 Nodegroup just like our Nginx Ingress Controller.
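The namespace-based split works because a Fargate profile selects pods by namespace and, optionally, by labels; pods that match no selector fall back to the regular EC2 nodes. The Python sketch below models that decision. The namespace names (`skf`, `skf-db`, `ingress-nginx`) are hypothetical, and the matching is simplified to exact namespace comparison.

```python
from typing import Dict, List, Optional


def runs_on_fargate(namespace: str,
                    labels: Optional[Dict[str, str]],
                    selectors: List[Dict]) -> bool:
    """Return True if a pod matches at least one Fargate profile selector.

    Each selector has a 'namespace' and an optional 'labels' mapping; a pod
    matches when its namespace is equal and it carries every listed label.
    """
    pod_labels = labels or {}
    for selector in selectors:
        if selector["namespace"] != namespace:
            continue
        required = selector.get("labels", {})
        if all(pod_labels.get(key) == value for key, value in required.items()):
            return True
    return False


# Hypothetical profile: only the 'skf' namespace is scheduled onto Fargate.
PROFILE_SELECTORS = [{"namespace": "skf"}]

for ns in ("skf", "skf-db", "ingress-nginx"):
    target = "Fargate" if runs_on_fargate(ns, None, PROFILE_SELECTORS) else "EC2"
    print(f"{ns}: {target}")
```

With this layout, moving MySQL or the ingress controller to EC2 is only a matter of deploying them into a namespace the profile does not select.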

Per-Cluster Authentication

Once both clusters are up and running with all resources configured, it’s time to tackle the authentication that the Main cluster needs to deploy within the Labs cluster. There are several approaches for this, which will strongly depend on the cloud provider you choose, as every platform provides a different authentication strategy and tooling for Kubernetes clusters.

As can be seen in the official SKF documentation for installing in Azure, it’s straightforward to configure authentication between resources in two clusters there, since AKS provides certificate-based authentication by default, embedding the certificate into the kubeconfig file.

The Google Cloud Platform installation docs, in turn, show that it is necessary to configure a Google IAM Service Account with permissions to manage the Labs cluster, and to generate a Service Account key to embed into the SKF backend pod.

In AWS, we also had to tackle the authentication between clusters. The best authentication solution would be to use an AWS IAM Role to authenticate against the Labs Kubernetes cluster, with the main advantage of not having to work with long-term IAM credentials. Even though it is probably the most secure solution, it requires the aws-cli tool to be installed in the Docker image of the SKF backend, which means we’d need to create an AWS-specific version of this image and maintain it upstream.
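The dependency on aws-cli comes from how IAM-based authentication is usually wired into a kubeconfig: the user entry tells the Kubernetes client to execute `aws eks get-token` each time it needs a fresh token. A minimal Python sketch of such a user entry follows; the function name and the cluster name and role ARN used with it are placeholders, not values from our setup.

```python
from typing import Any, Dict, Optional


def iam_exec_user(cluster_name: str, role_arn: Optional[str] = None) -> Dict[str, Any]:
    """Build a kubeconfig 'user' entry that obtains tokens through aws-cli.

    The Kubernetes client runs the listed command itself, so the 'aws'
    binary has to be present wherever the client runs.
    """
    args = ["eks", "get-token", "--cluster-name", cluster_name]
    if role_arn:
        # Optionally assume a dedicated IAM role when requesting the token.
        args += ["--role-arn", role_arn]
    return {
        "exec": {
            "apiVersion": "client.authentication.k8s.io/v1beta1",
            "command": "aws",
            "args": args,
        }
    }
```

Because the token is fetched on demand, no long-lived credential is stored in the file, but the container image has to ship the extra binary.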

To align with the current SKF model and keep it as cloud agnostic as possible, we opted instead for creating a Kubernetes Service Account in the Labs cluster, and embedding the Service Account token into the kubeconfig file that SKF uses to authenticate against the Labs cluster.
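As an illustration of the token-based approach, the Python sketch below assembles a kubeconfig that authenticates with a Service Account token. The helper name, the default cluster and user names, and the example endpoint are assumptions; in practice the token and CA certificate would be read from the Service Account’s secret in the Labs cluster.

```python
import base64
import json
from typing import Any, Dict


def build_kubeconfig(server: str, ca_cert_pem: bytes, token: str,
                     cluster: str = "skf-labs",
                     user: str = "skf-labs-deployer") -> Dict[str, Any]:
    """Return a kubeconfig structure that uses a Service Account token."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{
            "name": cluster,
            "cluster": {
                "server": server,
                # kubeconfig expects the CA certificate base64-encoded.
                "certificate-authority-data":
                    base64.b64encode(ca_cert_pem).decode("ascii"),
            },
        }],
        "users": [{"name": user, "user": {"token": token}}],
        "contexts": [{
            "name": cluster,
            "context": {"cluster": cluster, "user": user},
        }],
        "current-context": cluster,
    }


def dump_kubeconfig(config: Dict[str, Any]) -> str:
    """Serialize as JSON, which YAML parsers accept as valid input."""
    return json.dumps(config, indent=2)
```

The trade-off is that this token is a long-lived secret, so the Service Account should be bound only to the permissions needed to manage lab resources.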

Contribution

Given our Core value of #SocialResponsibility, we wanted to contribute back to OWASP’s Security Knowledge Framework with AWS Support.

After we reached out to OWASP and explained the work we had done to give SKF full AWS support, they were excited to recognise Personio as an official supporter of OWASP’s SKF.

With added AWS support, SKF now enables organizations to enhance their security processes and Secure Software Development Life Cycles. Moreover, we are humbled to have become official supporters of an OWASP project and contributed back to the security community.

We are really excited to see how this new service will help Personio and its customers reach new heights.

If you are planning to roll out the Security Knowledge Framework and want to learn more about it, go to their official website, where you will find all their documentation and even a Live Demo to see how it works.

And if you are excited to work on challenges like these, check out our open positions and join us at Personio, the most valuable HR tech company in Europe!

Details

- Author: Carles Llobet

- Date: 21 September, 2022

- Medium URL: https://medium.com/inside-personio/deploying-security-knowledge-framework-personio-144fa759de6e